GC-TTT - Test-time Offline Reinforcement Learning on Goal-related Experience

Abstract

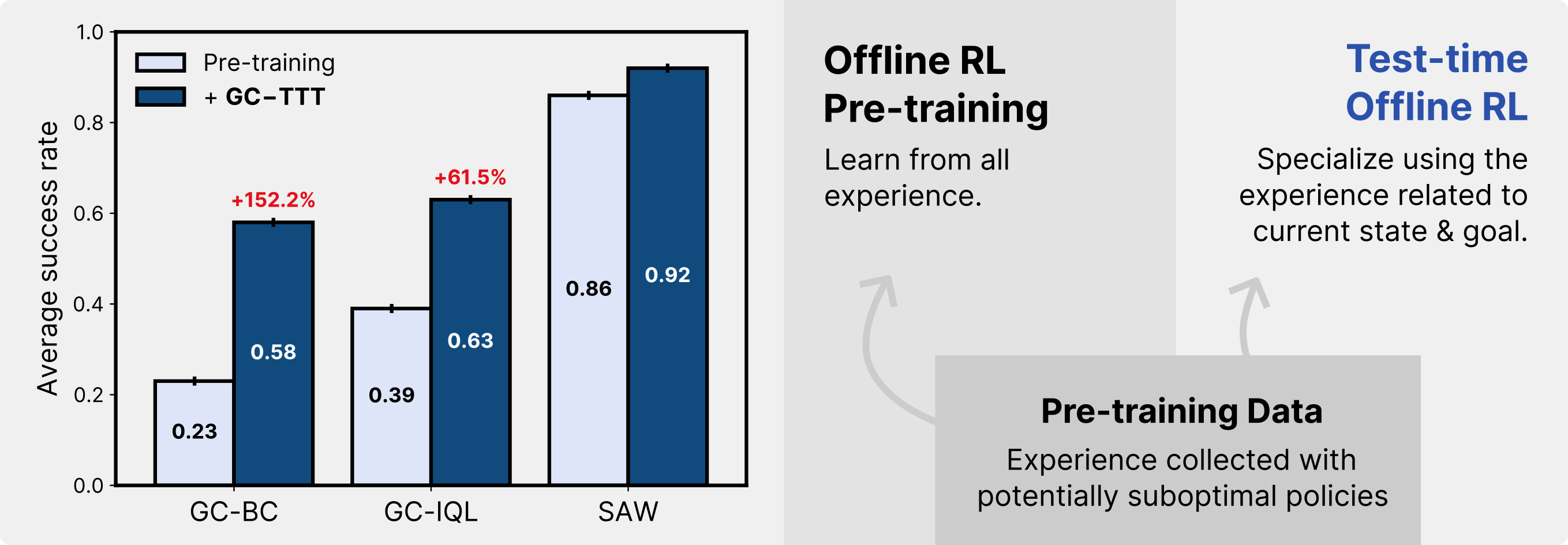

Foundation models compress a large amount of information in a single, large neural network, which can then be queried for individual tasks. There are strong parallels between this widespread framework and offline goal-conditioned reinforcement learning algorithms: a universal value function is trained on a large number of goals, and the policy is evaluated on a single goal in each test episode. Extensive research in foundation models has shown that performance can be substantially improved through test-time training, specializing the model to the current goal. We find similarly that test-time offline reinforcement learning on experience related to the test goal can lead to substantially better policies at minimal compute costs. We propose a novel self-supervised data selection criterion, which selects transitions from an offline dataset according to their relevance to the current state and quality with respect to the evaluation goal. We demonstrate across a wide range of high-dimensional loco-navigation and manipulation tasks that fine-tuning a policy on the selected data for a few gradient steps leads to significant performance gains over standard offline pre-training. Our goal-conditioned test-time training (GC-TTT) algorithm applies this routine in a receding-horizon fashion during evaluation, adapting the policy to the current trajectory as it is being rolled out. Finally, we study compute allocation at inference, demonstrating that, at comparable costs, GC-TTT induces performance gains that are not achievable by scaling model size.

Method: Goal-conditioned Test-time Training (GC-TTT)

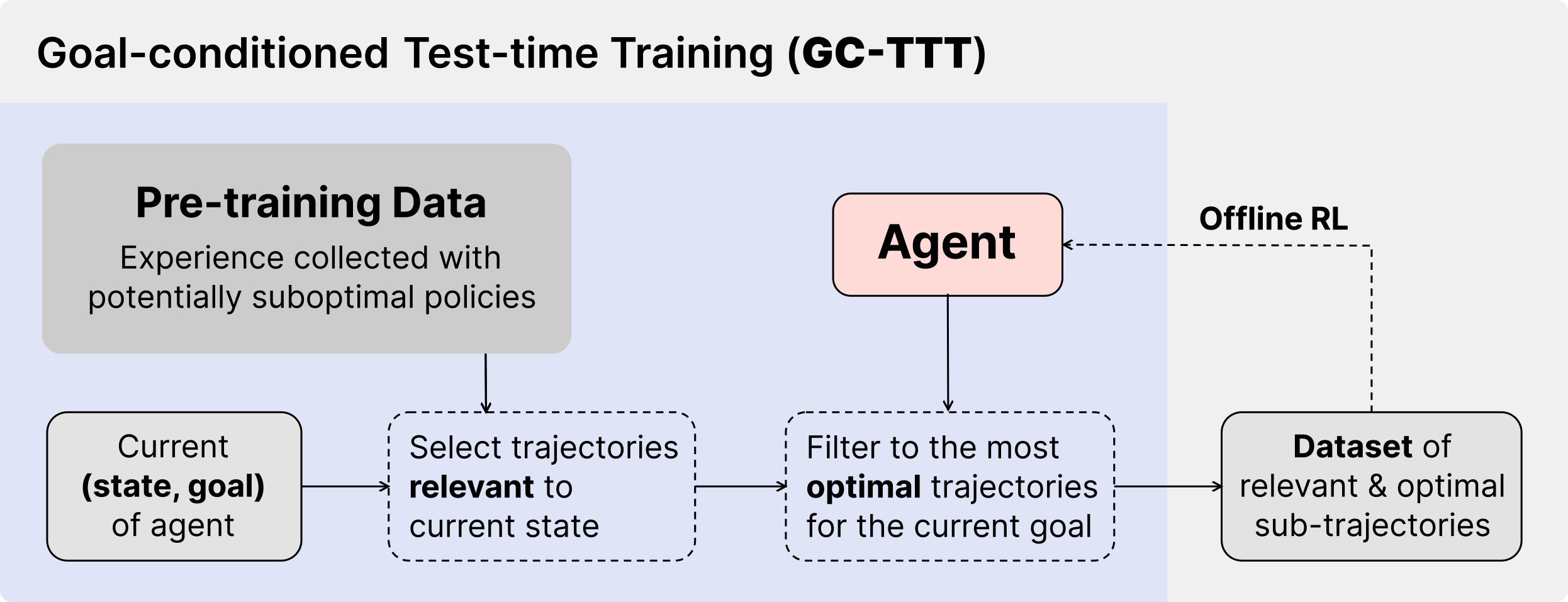

We propose to dynamically fine-tune the policy during evaluation, using data from the pre-training dataset $\mathcal{D}$. Our method, Goal-conditioned Test-time Training (GC-TTT), carefully selects a subset of data $\mathcal{D}(s, g^*)$ that is "close" to the agent's current state $s$ and "optimal" for reaching its current goal $g^*$. The policy is then fine-tuned on this small, specialized dataset for a few gradient steps.

This process has two key components:

- What to train on? (Data Selection): We first filter the dataset for sub-trajectories that are relevant to the agent's current state $s$, i.e., sub-trajectories whose first state $s_1$ lies nearby according to a distance function ($d(s, s_1) < \epsilon$). We then rank these relevant sub-trajectories by how close to optimal they are for the current test goal $g^*$. We measure optimality with an $H$-step return estimate, $\hat{V}$, which combines the rewards along the sub-trajectory with the critic's value estimate of its final state. We select the sub-trajectories whose $\hat{V}$ falls in the top $q$-th percentile.

- When to train? (Receding Horizon Training): We apply this fine-tuning process in a receding-horizon fashion. Every $K$ steps, we reset the policy weights back to the original pre-trained ones. We then perform data selection based on the new current state and fine-tune the policy again. This allows the agent to dynamically adapt its policy as it moves through the environment and correct for any deviations.
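
The two components above can be sketched in a few lines of NumPy. This is an illustrative sketch, not the authors' implementation. The function names (`select_indices`, `receding_horizon_rollout`), the callback arguments, the Euclidean distance, the discount factor `gamma`, and the reading of "top $q$-th percentile" as "at or above the $q$-th percentile of $\hat{V}$" are all assumptions made for this example.

```python
import numpy as np

def select_indices(start_states, rewards, final_values, state, eps, q, gamma=0.99):
    """Return indices of sub-trajectories that are relevant and near-optimal.

    start_states: (N, d) first state s_1 of each sub-trajectory
    rewards:      (N, H) rewards along each sub-trajectory w.r.t. the test goal
    final_values: (N,)   critic value of each final state w.r.t. the test goal
    """
    # Relevance filter: keep sub-trajectories starting within eps of the state.
    # Euclidean distance is an assumption; the text only requires some d(s, s_1).
    dists = np.linalg.norm(start_states - state, axis=1)
    relevant = np.flatnonzero(dists < eps)
    if relevant.size == 0:
        return relevant
    # Optimality filter: H-step return = discounted rewards + bootstrapped value.
    horizon = rewards.shape[1]
    discounts = gamma ** np.arange(horizon)
    v_hat = rewards[relevant] @ discounts + gamma ** horizon * final_values[relevant]
    # Assumed reading of "top q-th percentile": keep estimates >= q-th percentile.
    return relevant[v_hat >= np.percentile(v_hat, q)]

def receding_horizon_rollout(state, pretrained, select_fn, finetune_fn,
                             act_fn, step_fn, num_steps, K):
    """Roll out a policy, re-specializing it every K steps.

    All callbacks are hypothetical placeholders:
      select_fn(state) -> indices, finetune_fn(params, indices) -> params,
      act_fn(params, state) -> action, step_fn(state, action) -> next state.
    """
    params, num_updates = pretrained.copy(), 0
    for t in range(num_steps):
        if t % K == 0:
            # Reset to the pre-trained weights before each fine-tuning round.
            params = pretrained.copy()
            idx = select_fn(state)
            if len(idx) > 0:
                params = finetune_fn(params, idx)
            num_updates += 1
        state = step_fn(state, act_fn(params, state))
    return state, num_updates
```

Resetting to the pre-trained weights before each round keeps every fine-tuning step local to the current state, so updates from earlier parts of the trajectory do not accumulate.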

Results

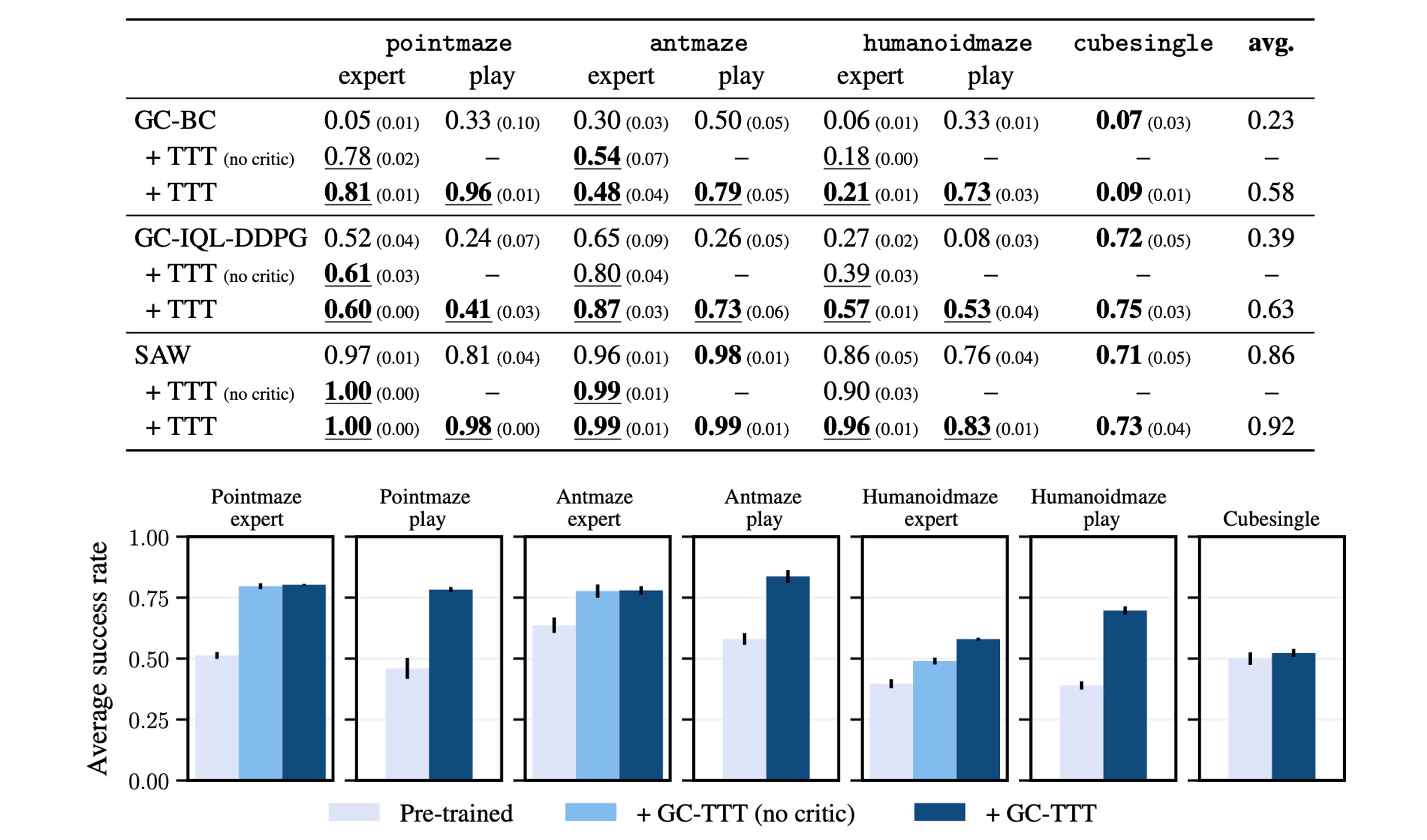

We evaluated GC-TTT on a range of loco-navigation (pointmaze, antmaze, humanoidmaze) and manipulation (cubesingle) tasks from OGBench. As shown in Table 1, GC-TTT provides substantial improvements when applied on top of various offline RL backbones (GC-BC, GC-IQL, and SAW). On average, GC-TTT improved the success rate of GC-BC from 0.23 to 0.58 (+152.2%) and GC-IQL from 0.39 to 0.63 (+61.5%).
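
The relative gains quoted above follow directly from the rounded average success rates:

$$
\frac{0.58 - 0.23}{0.23} \approx 1.522 \;(+152.2\%), \qquad \frac{0.63 - 0.39}{0.39} \approx 0.615 \;(+61.5\%).
$$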

Ablation Studies

We conducted several ablations to understand why GC-TTT works. We found:

- Data selection is crucial (Fig. 6, left): Both the relevance filter and the optimality filter are necessary. Training on random data, or on data that is relevant but not optimal (or vice versa), fails to produce significant gains.

- TTT frequency matters (Fig. 6, middle): Performance improves as test-time updates become more frequent. More complex environments such as antmaze benefit from more frequent updates (e.g., every 100 steps).

- GC-TTT scales better than model size (Fig. 6, right): We compared allocating more compute at test-time by (a) increasing TTT frequency or (b) scaling the policy network size. GC-TTT (blue line) consistently outperforms simple model scaling (grey line) at matched inference FLOPs.

BibTeX

@misc{bagatella2025testtime,
  title={Test-time Offline Reinforcement Learning on Goal-related Experience},
  author={Marco Bagatella* and Mert Albaba* and Jonas Hübotter and Georg Martius and Andreas Krause},
  year={2025},
  eprint={2507.18809},
  archivePrefix={arXiv},
  primaryClass={cs.LG},
  url={https://arxiv.org/abs/2507.18809},
}