NIL - No-data Imitation Learning

We teach robots to move by showing them AI-generated videos, with no need for real-world motion data.

Abstract

Acquiring physically plausible motor skills across diverse and unconventional morphologies, including humanoid robots, quadrupeds, and animals, is essential for advancing character simulation and robotics. Traditional methods such as reinforcement learning (RL) are task-specific and require extensive reward engineering. Imitation learning offers an alternative but relies on high-quality expert demonstrations, which are difficult to obtain for non-human morphologies. We propose NIL (No-data Imitation Learning), a data-independent approach that learns 3D motor skills from 2D videos generated by pre-trained video diffusion models. We guide the learning process with a reward that combines the similarity of video embeddings from vision transformers with the Intersection over Union (IoU) of segmentation masks. We demonstrate that NIL outperforms baselines trained on 3D motion-capture data in humanoid locomotion tasks, effectively replacing data collection with data generation.

Method

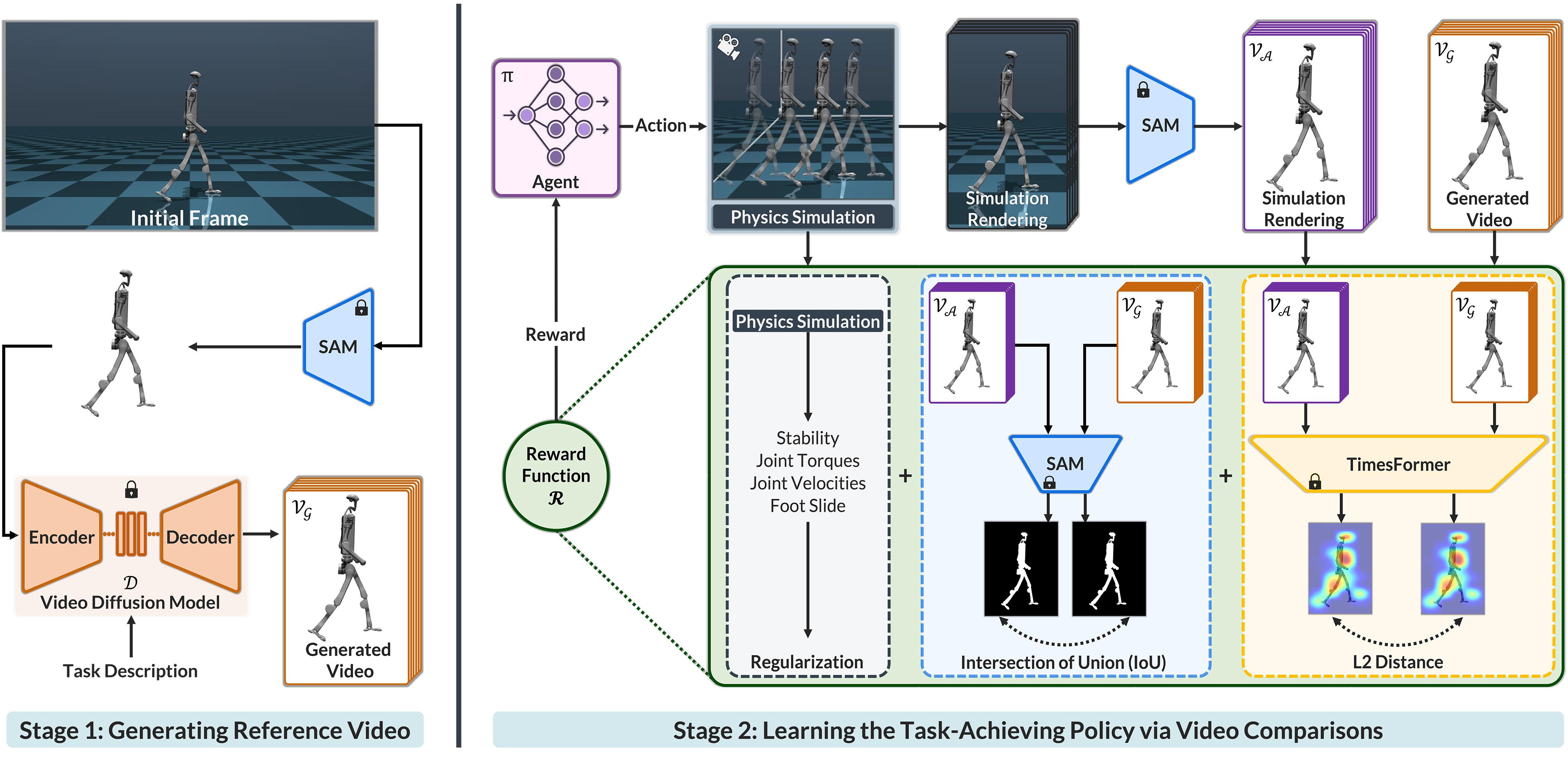

No-data Imitation Learning (NIL) learns physically plausible 3D motor skills from 2D videos generated on-the-fly. The process has two stages:

- Stage 1: Reference Video Generation. Given an initial frame of the robot and a text prompt (e.g., "Robot is walking"), a pre-trained video diffusion model generates a reference video of the desired skill.

- Stage 2: Policy Learning via Video Comparison. A control policy is trained in a physical simulator. The reward function encourages the agent to mimic the generated video by measuring the similarity between the agent's rendered video and the reference video.
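The two stages can be outlined as a training loop. This is a minimal, hypothetical sketch, not the authors' implementation: `train_nil`, `generate_video`, `render_rollout`, `video_reward`, and `update_policy` are placeholder names standing in for the video diffusion model, the simulator's renderer, the video-comparison reward, and an RL update step (which algorithm is used is an assumption left open here). What it illustrates is the data flow: the reference video is generated once from the initial frame and prompt, then held fixed while the policy is optimized against it.

```python
from typing import Any, Callable, List, Sequence

Frame = Any  # placeholder type for one rendered or generated image


def train_nil(
    initial_frame: Frame,
    prompt: str,
    generate_video: Callable[[Frame, str], List[Frame]],                  # hypothetical: diffusion model
    render_rollout: Callable[[Any, int], List[Frame]],                    # hypothetical: simulate + render
    video_reward: Callable[[Sequence[Frame], Sequence[Frame]], float],    # hypothetical: video comparison
    update_policy: Callable[[Any, float], Any],                           # hypothetical: RL update step
    policy: Any,
    iterations: int,
) -> Any:
    # Stage 1: generate the reference video once, conditioned on the
    # robot's initial frame and the text prompt.
    reference = generate_video(initial_frame, prompt)

    # Stage 2: roll out the current policy in simulation, render it with the
    # same number of frames as the reference, score it, and update the policy.
    for _ in range(iterations):
        rendered = render_rollout(policy, len(reference))
        reward = video_reward(rendered, reference)
        policy = update_policy(policy, reward)
    return policy
```

Passing the components in as callables keeps the sketch independent of any particular diffusion model, simulator, or RL library. Collapsing each rollout to one scalar reward is a simplification of this sketch; RL training typically assigns rewards per timestep.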

This reward combines three key components:

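- Video Encoding Similarity: Based on the L2 distance between TimeSformer embeddings of the agent's rendered video and the reference video; a smaller distance yields a higher reward.

- Segmentation Mask Similarity: The Intersection over Union (IoU) between the robot's segmentation masks in the rendered video and in the reference video.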

- Regularization: Penalties on joint torques, velocities, and foot-sliding to ensure smooth, stable motion.
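The three components can be combined as below. This is an illustrative sketch under stated assumptions, not the paper's exact formula: the embeddings are taken as given (in NIL they come from TimeSformer), the L2 distance is mapped to a similarity with `exp(-d)`, each frame's mask is a flat list of booleans with IoU averaged over frames, and the function names, weights `w_video`, `w_iou`, `w_reg`, and the quadratic penalty form are all hypothetical.

```python
import math
from typing import Sequence


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def mask_iou(a: Sequence[bool], b: Sequence[bool]) -> float:
    """IoU of two binary masks given as flat per-pixel boolean lists."""
    inter = sum(1 for x, y in zip(a, b) if x and y)
    union = sum(1 for x, y in zip(a, b) if x or y)
    return 1.0 if union == 0 else inter / union  # two empty masks count as identical


def nil_style_reward(
    emb_agent: Sequence[float],
    emb_ref: Sequence[float],
    masks_agent: Sequence[Sequence[bool]],
    masks_ref: Sequence[Sequence[bool]],
    torques: Sequence[float],
    joint_vels: Sequence[float],
    foot_slip_speeds: Sequence[float],
    w_video: float = 1.0,   # hypothetical weight
    w_iou: float = 1.0,     # hypothetical weight
    w_reg: float = 1e-3,    # hypothetical weight
) -> float:
    # Video term: exp(-d) is an assumed mapping, equal to 1 when embeddings match.
    r_video = math.exp(-l2_distance(emb_agent, emb_ref))
    # Mask term: mean IoU over corresponding frames (0 if there are no frames).
    ious = [mask_iou(a, b) for a, b in zip(masks_agent, masks_ref)]
    r_iou = sum(ious) / len(ious) if ious else 0.0
    # Regularization: assumed quadratic penalties on torques, joint velocities, foot sliding.
    penalty = (
        sum(t * t for t in torques)
        + sum(v * v for v in joint_vels)
        + sum(s * s for s in foot_slip_speeds)
    )
    return w_video * r_video + w_iou * r_iou - w_reg * penalty
```

Mapping the distance through `exp(-d)` keeps the video term in (0, 1], on the same scale as IoU, which makes the weights easier to balance; this is a design choice of the sketch rather than something stated above.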

Results

Comparison to Baselines

NIL successfully learns locomotion for various robots, including humanoids and quadrupeds. Crucially, NIL achieves this without any expert motion data, yet it outperforms state-of-the-art methods that are trained on 25 curated 3D motion-capture demonstrations. Below, we compare NIL (trained on generated video) to AMP (trained on 3D MoCap data).

Unitree H1 Humanoid and Unitree A1 Quadruped

Unitree H1 Humanoid and Talos Humanoid

Importance of Reward Components

Our reward function combines video similarity, IoU similarity, and regularization. Removing any component degrades performance, leading to jittery, distorted, or suboptimal motion. Using all components together produces a stable and natural walking gait.

Impact of Video Diffusion Models

The performance of NIL is directly linked to the quality of the underlying video diffusion model. As these models improve, NIL's ability to learn complex and natural behaviors also improves, demonstrating a promising path for future progress.

Comparison of Different Models

Effect of Model Improvements

BibTeX

@misc{albaba2025nilnodataimitationlearning,
  title={NIL: No-data Imitation Learning by Leveraging Pre-trained Video Diffusion Models},
  author={Mert Albaba and Chenhao Li and Markos Diomataris and Omid Taheri and Andreas Krause and Michael Black},
  year={2025},
  eprint={2503.10626},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2503.10626},
}