RILe - Reinforced Imitation Learning

Abstract

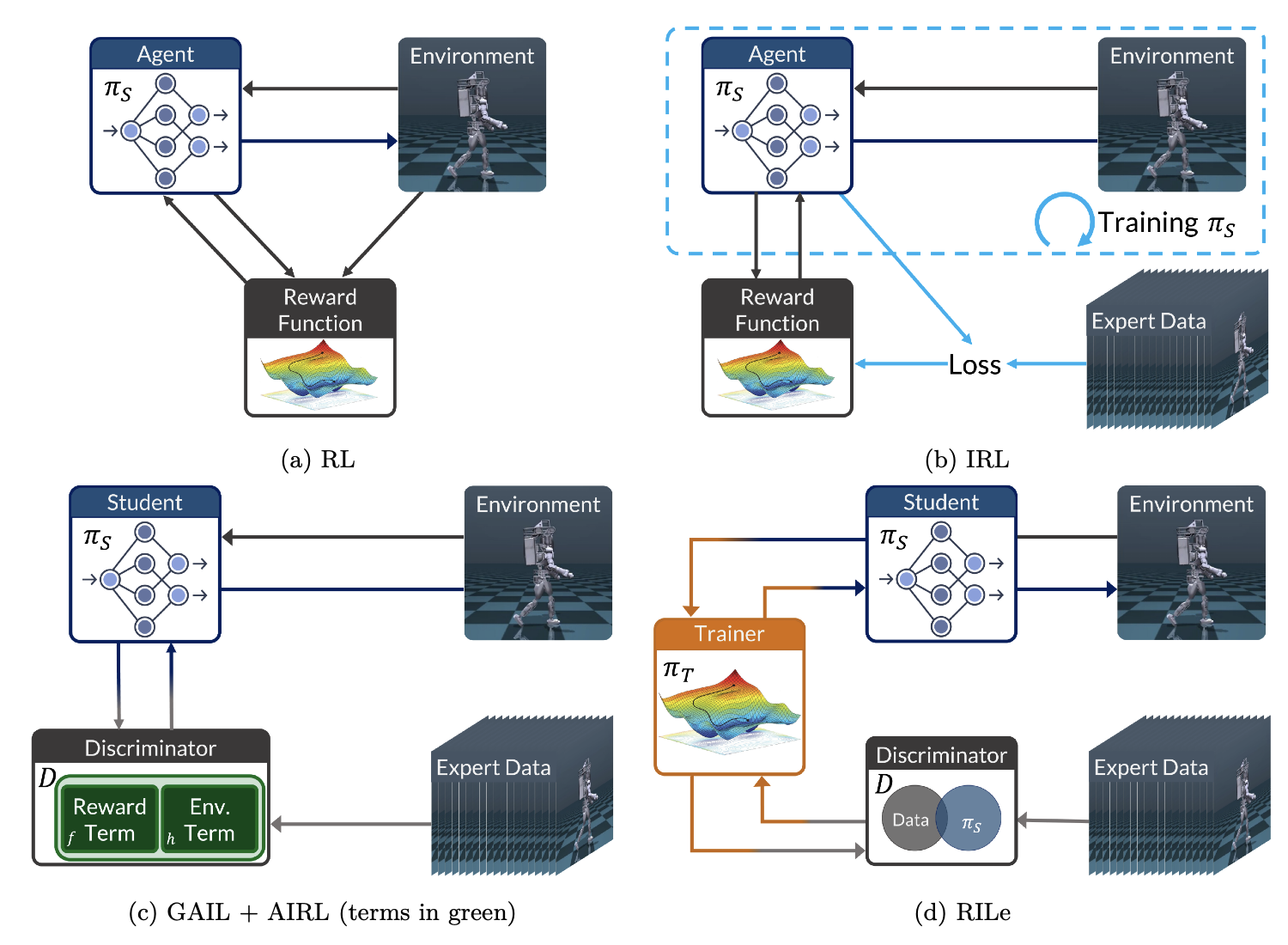

Acquiring complex behaviors is essential for artificially intelligent agents, yet learning these behaviors in high-dimensional settings such as robotics is difficult because of the vast search space. Three main approaches address this challenge: (1) reinforcement learning (RL) relies on a hand-defined reward function, which requires extensive manual effort; (2) inverse reinforcement learning (IRL) uncovers reward functions from expert demonstrations but relies on a computationally expensive iterative process; and (3) imitation learning (IL) directly compares an agent's actions with expert demonstrations, but in high-dimensional environments such binary comparisons often provide insufficient feedback for effective learning. To address these limitations, we introduce RILe (Reinforced Imitation Learning), a framework that learns a dense reward function efficiently and achieves strong performance in high-dimensional tasks. RILe combines the granular reward-function learning of IRL with the computational efficiency of IL. Specifically, RILe introduces a novel trainer-student framework: the trainer distills an adaptive reward function, and the student uses this reward signal to imitate expert behaviors. Uniquely, the trainer is itself a reinforcement learning agent that learns a policy for generating rewards. It is trained to select reward signals by distilling feedback from a discriminator that judges the student's proximity to expert behavior. We evaluate RILe on general reinforcement learning benchmarks and robotic locomotion tasks, where it achieves state-of-the-art performance.

Method

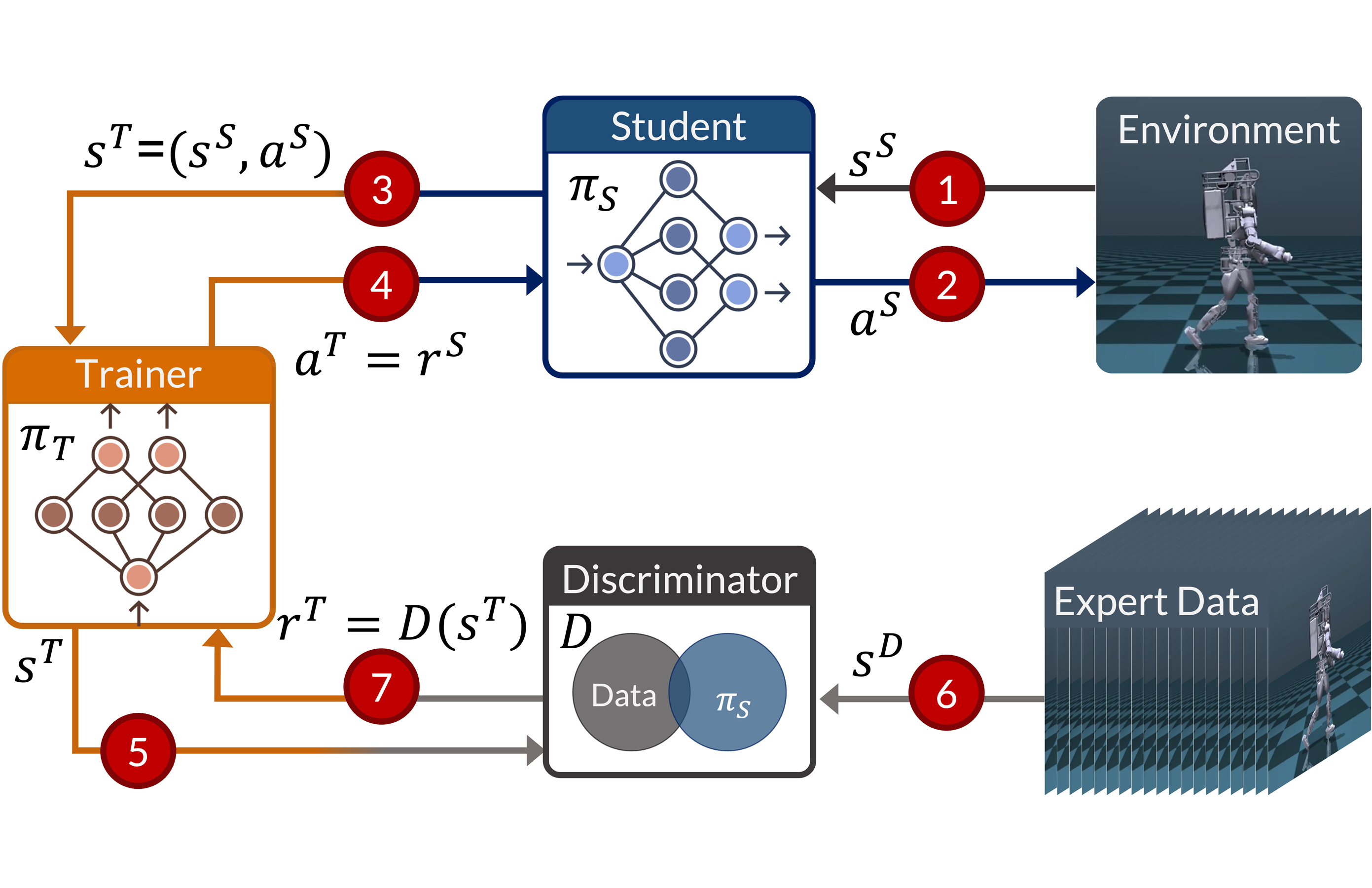

We propose Reinforced Imitation Learning (RILe) to jointly learn a reward function and a policy that emulates expert-like behavior within a single learning process. RILe introduces a novel trainer-student dynamic, as illustrated in Figure 2. The student agent learns an action policy by interacting with the environment, while the trainer agent learns a reward function to guide the student. Both agents are trained simultaneously using reinforcement learning, with feedback from an adversarial discriminator.

Framework Components

Student Agent: The student learns a policy $\pi_{S}$ as in a standard Markov Decision Process (MDP). However, instead of receiving a pre-defined reward, it is rewarded by the trainer's policy $\pi_{T}$: at each step, the trainer's action $a^{T}$ serves directly as the student's reward $r^{S}$. The student's goal is to maximize the expected cumulative reward generated by the trainer.
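As a rough formalization of the student's goal, one could write its objective as a discounted return over trainer-generated rewards. The discount factor $\gamma$, the time index $t$, and the assumption that $\pi_{T}$ is stochastic are introduced here for illustration and are not stated in the text above.

$$
J(\pi_{S}) = \mathbb{E}_{\pi_{S},\,\pi_{T}}\left[\sum_{t=0}^{\infty} \gamma^{t}\, r^{S}_{t}\right], \qquad r^{S}_{t} = a^{T}_{t} \sim \pi_{T}\left(\cdot \mid s^{S}_{t}, a^{S}_{t}\right)
$$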

Discriminator: The discriminator $D_{\phi}$ is a classifier trained to distinguish state-action pairs drawn from the expert demonstrations $\tau_{E}$ from pairs generated by the student policy $\pi_{S}$. Its objective is the standard binary cross-entropy loss used in Adversarial Imitation Learning (AIL).
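The binary cross-entropy objective mentioned above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the function name `discriminator_bce_loss`, the `eps` stabilizer, and the label convention (expert pairs labeled 1, student pairs labeled 0) are assumptions, and AIL papers differ on which class is labeled 1.

```python
import math

def discriminator_bce_loss(expert_probs, student_probs, eps=1e-8):
    """Binary cross-entropy over two batches of discriminator outputs.

    expert_probs / student_probs hold D_phi outputs in (0, 1) for
    state-action pairs from the expert and from the student.
    Assumed convention: expert = label 1, student = label 0.
    """
    # Expert pairs should be scored close to 1: penalize -log D.
    expert_term = -sum(math.log(p + eps) for p in expert_probs) / len(expert_probs)
    # Student pairs should be scored close to 0: penalize -log(1 - D).
    student_term = -sum(math.log(1.0 - p + eps) for p in student_probs) / len(student_probs)
    return expert_term + student_term

# A discriminator that separates the two sources has a lower loss
# than one that outputs 0.5 everywhere.
separating_loss = discriminator_bce_loss([0.9, 0.8], [0.1, 0.2])
chance_loss = discriminator_bce_loss([0.5, 0.5], [0.5, 0.5])
```

At chance level each term is roughly $\log 2$, so a total loss near $2\log 2$ indicates that the discriminator cannot tell student and expert apart.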

Trainer Agent: The trainer agent learns a policy $\pi_{T}$ that outputs reward signals for the student. It observes the student's state-action pair $s^{T}=(s^{S},a^{S})$ and outputs a scalar reward $a^{T}$. The trainer is, in turn, rewarded based on how well its action $a^{T}$ matches the discriminator's evaluation $D_{\phi}(s^{T})$. This setup trains the trainer to learn a reward function that effectively guides the student toward expert-like behavior.
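One interaction step of the three components might look like the sketch below. The text only says the trainer is rewarded by how well $a^{T}$ matches $D_{\phi}(s^{T})$; the concrete product form used in `trainer_reward`, the rescaling of the discriminator output to $[-1, 1]$, the assumed range of $a^{T}$, and all function names are assumptions of this sketch rather than the confirmed method.

```python
def trainer_reward(disc_prob, trainer_action):
    """Hypothetical trainer reward (an assumed form, not from the text).

    Positive when the sign of the trainer's action agrees with the
    discriminator's rescaled judgment, negative when it disagrees.
    """
    disc_score = 2.0 * disc_prob - 1.0   # map D_phi(s^T) from [0, 1] to [-1, 1]
    return disc_score * trainer_action   # a^T is assumed to lie in [-1, 1]

def rile_step(student_state, student_policy, trainer_policy, discriminator):
    """One step: the trainer's action becomes the student's reward."""
    student_action = student_policy(student_state)
    trainer_state = (student_state, student_action)   # s^T = (s^S, a^S)
    trainer_action = trainer_policy(trainer_state)    # a^T, a scalar
    student_reward = trainer_action                   # r^S = a^T
    reward_for_trainer = trainer_reward(discriminator(trainer_state), trainer_action)
    return student_action, student_reward, reward_for_trainer

# Toy stand-ins for the three learned components (illustrative only).
action, r_student, r_trainer = rile_step(
    0.0,
    student_policy=lambda s: 1.0,
    trainer_policy=lambda s_t: 0.5,
    discriminator=lambda s_t: 0.9,
)
```

In this toy call the discriminator considers the pair expert-like and the trainer emits a positive reward, so both agents receive positive feedback; had the trainer emitted a negative value for the same pair, its own reward would have been negative.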

Results

We evaluated RILe across several studies, including comparisons of computational cost, reward function dynamics, and performance on high-dimensional continuous control tasks.

Performance in Continuous Control Tasks

We evaluated RILe on two sets of continuous control benchmarks. First, on standard MuJoCo tasks, RILe achieves the highest final reward in three of the four environments, with a particularly clear advantage in the high-dimensional Humanoid task. Second, on a more challenging high-dimensional robotic locomotion benchmark (LocoMuJoCo) that uses noisy, state-only data, RILe obtains the highest score on all seven tasks.

Reward Function Evaluation

To understand how RILe's reward-learning differs from baselines, we visualized the learned reward functions in a maze environment. Figure 4 shows that RILe's reward function dynamically adapts to the student's current policy, providing context-sensitive guidance that changes as the student improves. In contrast, the reward functions of GAIL and AIRL (which are tied directly to the discriminator output) remain relatively static throughout training.

Computational Cost and Performance Trade-offs

We compared RILe's computational cost against AIL (GAIL) and IRL (GCL, REIRL) methods. As shown in Figure 5, GAIL is computationally efficient but achieves limited peak performance, especially in complex tasks. Conversely, IRL methods achieve high rewards but require orders of magnitude more gradient steps. RILe bridges this gap, achieving the high performance characteristic of IRL at a computational cost much closer to that of AIL.

Impact of Advanced Discriminators

The quality of the discriminator is critical. We analyzed this by replacing the standard discriminator in both RILe and GAIL with a more advanced diffusion-based model (from DRAIL). As shown in Figure 6, RILe (orange) effectively leverages the advanced discriminator (red) to improve its final performance. In contrast, GAIL's performance remains largely unchanged, suggesting that it cannot capitalize on the better feedback. Furthermore, RILe's reward signal (CPR, plot c) maintains a positive correlation with true task performance, while GAIL's reward signal eventually becomes misaligned (negative correlation).
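To make the notion of alignment concrete, the sketch below computes a Pearson correlation between a learned reward signal and true task returns. It assumes that alignment is measured as a Pearson correlation over per-episode values, which the text does not specify, and the numbers are made up for illustration; they are not results from the paper.

```python
import math

def pearson_correlation(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Made-up per-episode values, for illustration only.
true_returns = [10.0, 20.0, 30.0, 40.0]
aligned_rewards = [0.1, 0.3, 0.4, 0.8]      # rises with true return
misaligned_rewards = [0.9, 0.6, 0.5, 0.2]   # falls as true return rises

aligned = pearson_correlation(aligned_rewards, true_returns)
misaligned = pearson_correlation(misaligned_rewards, true_returns)
```

A positive value means that higher learned reward goes with higher true return, while a negative value corresponds to the misalignment described above.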

BibTeX

@misc{albaba2025rilereinforcedimitationlearning,
  title={RILe: Reinforced Imitation Learning},
  author={Mert Albaba and Sammy Christen and Thomas Langarek and Christoph Gebhardt and Otmar Hilliges and Michael J. Black},
  year={2025},
  eprint={2406.08472},
  archivePrefix={arXiv},
  primaryClass={cs.LG},
  url={https://arxiv.org/abs/2406.08472},
}